Modern software systems are subject to uncertainties, such as dynamics in the availability of resources or changes of system goals. Self-adaptation enables a system to reason about runtime models in order to adapt itself and realise its goals regardless of uncertainties. We propose a novel modular approach for decision making in self-adaptive systems that combines distinct models for each relevant quality with runtime simulation of the models. Distinct models support on-the-fly changes of goals. Simulation enables efficient decision making to select an adaptation option that satisfies the system goals. The tradeoff is that simulation results can only provide guarantees with a certain level of accuracy. We demonstrate the benefits and tradeoffs of the approach for a service-based telecare system.

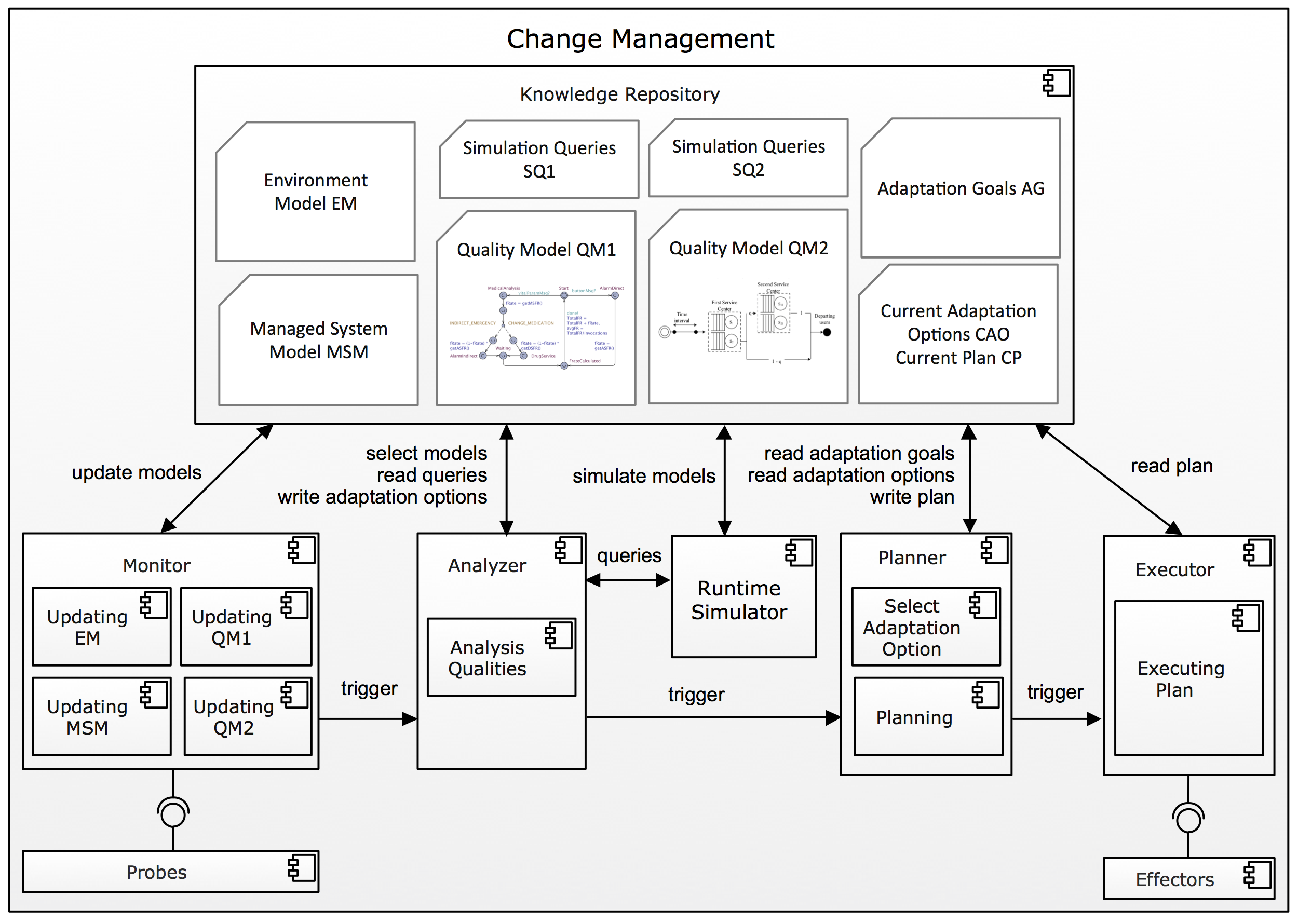

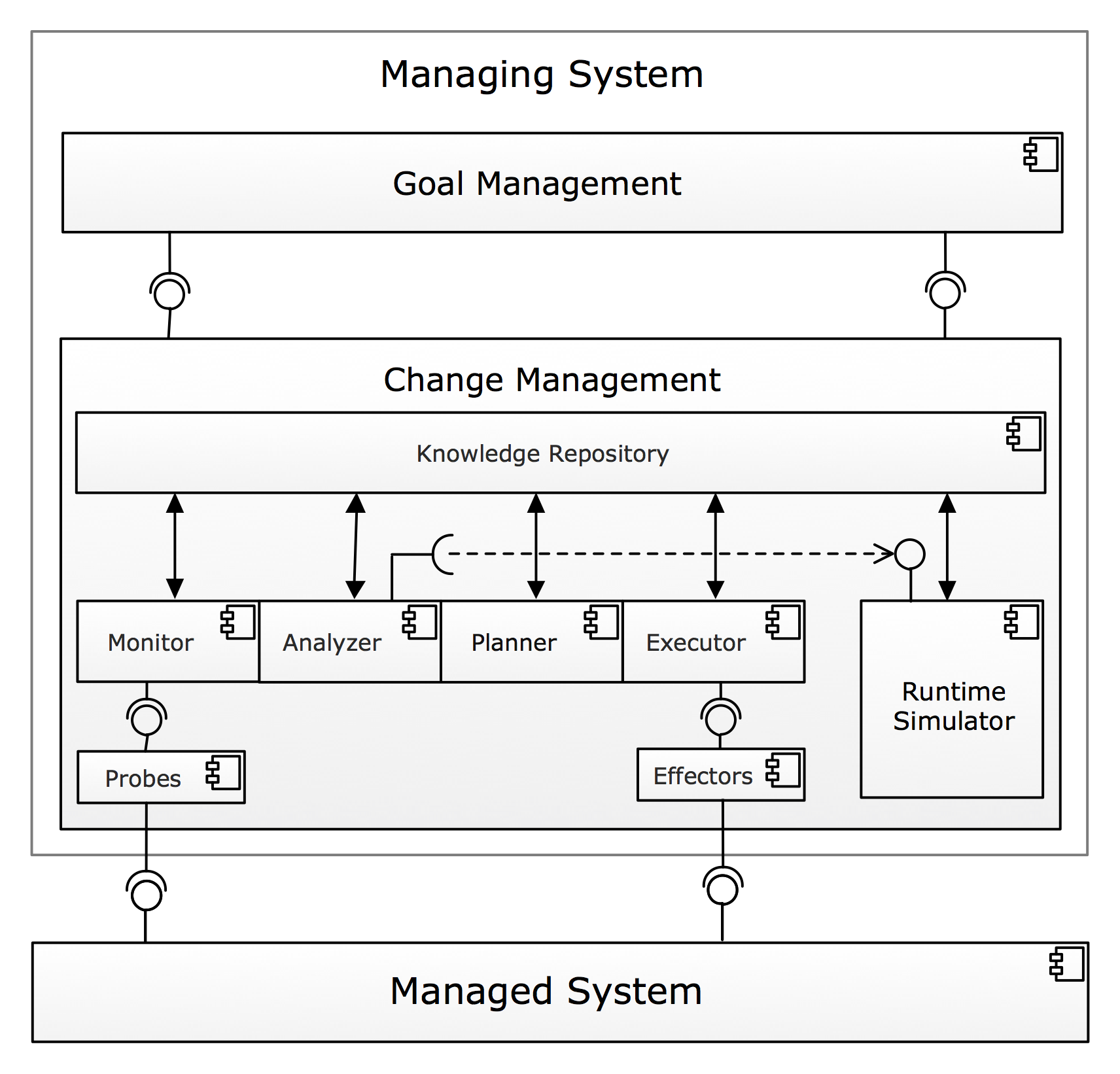

We study architecture-based self-adaptation, where a self-adaptive system comprises two parts: a managed system that provides the domain functionality, and a managing system that monitors and adapts the managed system. Furthermore, we look at managing systems that are realised with a MAPE-K based feedback loop that is divided into four components, Monitor, Analyze, Plan, and Execute, which share common Knowledge (hence, MAPE-K). Knowledge comprises models that provide a causally connected self-representation of the managed system, referring to the structure, behavior, goals, and other relevant aspects of the system.
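The division of responsibilities in a MAPE-K loop can be illustrated with a minimal Python sketch. It is not the implementation used in this work: the `ManagedSystem` stand-in, the service names `S1` and `S2`, their failure rates, and the threshold in `Knowledge` are all hypothetical and only serve to show how the four components communicate through shared knowledge.

```python
from dataclasses import dataclass, field


@dataclass
class Knowledge:
    """Shared knowledge: a tiny runtime model plus one goal (hypothetical values)."""
    failure_rate: float = 0.0        # latest value observed by the monitor
    max_failure_rate: float = 0.1    # goal: failure rate must not exceed this
    needs_adaptation: bool = False   # result of the analysis
    plan: list = field(default_factory=list)


class ManagedSystem:
    """Stand-in for the managed system, exposing a probe and an effector."""

    def __init__(self):
        self.service = "S1"
        self._rates = {"S1": 0.25, "S2": 0.05}  # hypothetical failure rates

    def probe(self):
        return self._rates[self.service]

    def effect(self, service):
        self.service = service


def monitor(system, k):
    k.failure_rate = system.probe()


def analyze(k):
    k.needs_adaptation = k.failure_rate > k.max_failure_rate


def plan(k):
    # Hypothetical plan: switch to the more reliable service when needed.
    k.plan = ["S2"] if k.needs_adaptation else []


def execute(system, k):
    for service in k.plan:
        system.effect(service)
    k.plan = []


def mape_k_cycle(system, k):
    """Run one Monitor-Analyze-Plan-Execute pass over the shared knowledge."""
    monitor(system, k)
    analyze(k)
    plan(k)
    execute(system, k)
```

In this sketch the components never call the managed system directly except through the probe (`monitor`) and the effector (`execute`); everything else is exchanged via the `Knowledge` object.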

Download:

- Models created in Uppaal.

- Simulation code of the Tele Assistance System.

Tele Assistance System

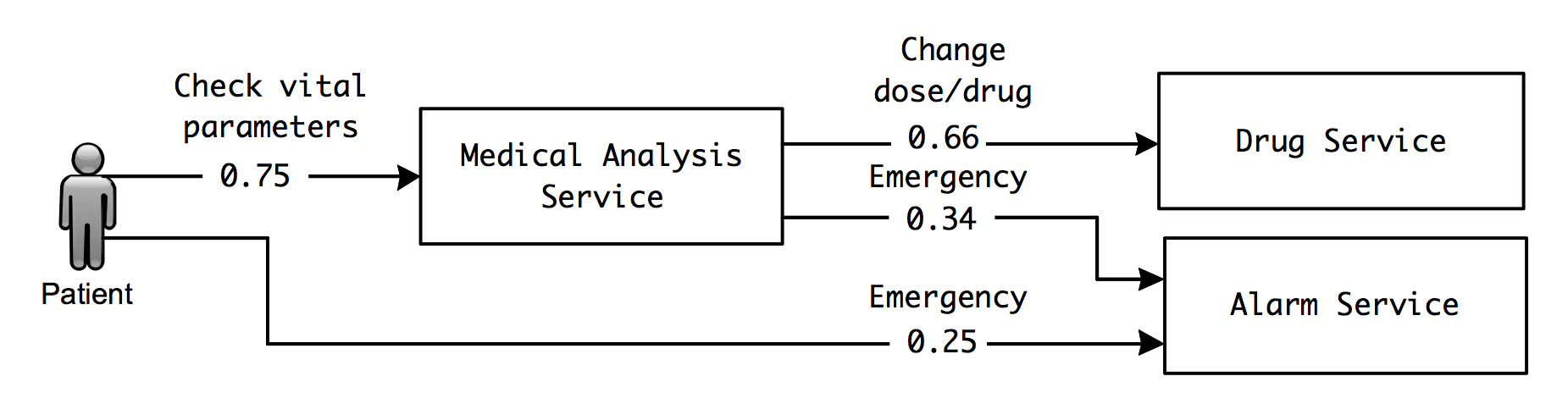

The Tele Assistance System (TAS) provides health support to users in their homes. Users wear a device that uses third-party remote services from health care, pharmacy, and emergency service providers. Fig. 1 shows the TAS workflow, which comprises different services. The workflow can be triggered periodically to measure the user's vital parameters and invoke a medical analysis service. Depending on the analysis result, a pharmacy service can be invoked to deliver new medication to the user or change their dose of medication, or the alarm service can be invoked, dispatching an ambulance to the user. The user can also invoke the alarm service directly via a panic button.

Multiple service providers offer concrete services for the Alarm service, Medical analysis service, and Drug service, abbreviated as AS, MAS, and DS respectively. Each concrete service has a failure rate, an invocation cost, and a service time. As a default behavior we assume that TAS selects a particular configuration of services, e.g. {AS3, MAS4, DS1}. We consider two types of uncertainties in TAS. The first one is related to the actions performed on the system. As shown in Fig. 1, we assume that on average 75% of the requests are (automatically triggered) checks of vital parameters and 25% are emergency calls invoked by the user. After checking vital parameters, depending on the result, 66% of the requests invoke the drug service and 34% of the requests invoke the alarm service. However, these probabilities can change over time. The second uncertainty is related to the concrete services of the system. These uncertainties include the availability of services and the quality parameters of running services. Depending on the load on the system, the network, and other conditions, the initial values of the failure rates and response times of the services are subject to change.
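To make the role of the workflow probabilities concrete, the following Python sketch computes the expected failure rate and the expected cost per request for one configuration. The branch probabilities are the ones stated above. Everything else is an assumption made for illustration: a request is taken to fail if any invoked service fails, service failures are taken to be independent, and the cost calculation charges the follow-up service even when the analysis fails.

```python
# Branch probabilities taken from the workflow description.
P_VITAL_CHECK = 0.75  # automatically triggered checks of vital parameters
P_EMERGENCY = 0.25    # emergency calls via the panic button
P_DRUG = 0.66         # after analysis: drug service is invoked
P_ALARM = 0.34        # after analysis: alarm service is invoked


def expected_failure_rate(f_as, f_mas, f_ds):
    """Probability that a request fails, given per-service failure rates.

    Assumption: a request fails if any invoked service fails, and
    failures of different services are independent.
    """
    ok_after_analysis = P_DRUG * (1 - f_ds) + P_ALARM * (1 - f_as)
    ok_vital_check = (1 - f_mas) * ok_after_analysis
    ok_emergency = 1 - f_as
    return 1 - (P_VITAL_CHECK * ok_vital_check + P_EMERGENCY * ok_emergency)


def expected_cost(c_as, c_mas, c_ds):
    """Expected invocation cost per request, given per-service costs.

    Simplifying assumption: the follow-up service is charged even if
    the medical analysis service fails.
    """
    vital_check = c_mas + P_DRUG * c_ds + P_ALARM * c_as
    return P_VITAL_CHECK * vital_check + P_EMERGENCY * c_as
```

Because the branch probabilities and the service qualities can drift at runtime, values computed this way at design time may no longer hold during operation, which is what motivates re-evaluating the configuration at runtime.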

We apply self-adaptation to TAS to deal with uncertainty related to failures, cost, and service time. Concretely, we introduce our approach with two scenarios. In the first scenario, only failure rates and costs are used to adapt TAS. In the second scenario, we extend the first scenario by also considering service times. An offline analysis may find a configuration that satisfies the set of requirements. However, as there are many uncertainties associated with TAS, the current configuration needs to be adapted at runtime based on the actual values of these uncertainties.

Modular Decision Making Approach For Self-adaptation

Fig. 2 shows the high-level overview of the model for self-adaptation that we use in our research.

The Managed System is the software that is subject to adaptation. At a given time the Managed System has a particular configuration that is determined by the arrangement and settings of the running components that make up the Managed System. The set of possible configurations can change over time. We refer to the different choices for adaptation from a given configuration as the adaptation options, or alternatively the possible configurations. Adapting the Managed System means selecting an adaptation option and changing the current configuration accordingly. We assume that the Managed System is equipped with probes and effectors to support monitoring the system and applying adaptations.

The Managed System is deployed in an environment that can be the physical world or other computing elements that are not under control of the Managed System. We consider self-adaptive systems where the Managed System and the environment in which the system operates may expose stochastic behavior.

The Managing System comprises two sublayers: Change Management and Goal Management. Change Management adapts the Managed System at runtime. Central to Change Management are the MAPE components of the feedback loop that interact with the Knowledge Repository. The MAPE components can trigger one another; for example, the Analyser may trigger the Planner once analysis is completed. The Analyser is supported by a Runtime Simulator that can run simulations on the models of the Knowledge Repository during operation.

Goal Management makes it possible to adapt Change Management itself. Goal Management offers an interface to the user to change the adaptation logic, for example, to change the models of the Knowledge Repository or to change the MAPE functions. Changing the adaptation software should be done safely, e.g., in quiescent states.
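The idea of an Analyser that evaluates adaptation options by simulation can be sketched in Python as follows. This is a plain Monte Carlo stand-in, not the Uppaal models used in this work. The concrete services, their failure rates, and their costs in `SERVICES` are hypothetical; the branch probabilities are those of the TAS workflow. The selection rule (cheapest option whose estimated failure rate meets the goal) is likewise an assumption chosen for illustration.

```python
import itertools
import random

# Hypothetical concrete services: name -> (failure rate, cost per invocation).
SERVICES = {
    "AS": {"AS1": (0.10, 4.0), "AS2": (0.02, 9.0)},
    "MAS": {"MAS1": (0.12, 3.0), "MAS2": (0.03, 8.0)},
    "DS": {"DS1": (0.05, 2.0), "DS2": (0.01, 5.0)},
}


def simulate_request(option, rng):
    """Simulate one TAS request for a configuration; return (failed, cost)."""

    def invoke(kind):
        rate, cost = SERVICES[kind][option[kind]]
        return rng.random() < rate, cost

    if rng.random() < 0.25:           # emergency call via the panic button
        return invoke("AS")
    failed, cost = invoke("MAS")      # periodic check of vital parameters
    if failed:
        return True, cost
    follow_up = "DS" if rng.random() < 0.66 else "AS"
    failed, extra = invoke(follow_up)
    return failed, cost + extra


def estimate(option, runs, rng):
    """Estimate failure rate and average cost of an option from `runs` samples."""
    failures = 0
    total_cost = 0.0
    for _ in range(runs):
        failed, cost = simulate_request(option, rng)
        failures += failed
        total_cost += cost
    return failures / runs, total_cost / runs


def select_option(max_failure_rate, runs=2000, seed=0):
    """Return (option, failure_rate, cost) for the cheapest option that meets
    the failure-rate goal according to the simulation, or None if none does."""
    rng = random.Random(seed)
    best = None
    for names in itertools.product(*(SERVICES[kind] for kind in SERVICES)):
        option = dict(zip(SERVICES, names))
        failure_rate, cost = estimate(option, runs, rng)
        if failure_rate <= max_failure_rate and (best is None or cost < best[2]):
            best = (option, failure_rate, cost)
    return best
```

The `runs` parameter reflects the tradeoff of simulation-based decision making: fewer runs give a faster decision but a less accurate estimate, so an option close to the threshold may be accepted or rejected incorrectly.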